Robot Grasping in Cluttered Environment with Active Exploration

Yuhong Deng*, Xiangfeng Guo*, Yixuan Wei*, Kai Lu*, Bin Fang, Di Guo, Fuchun Sun, Huaping Liu$

* denotes equal contribution

$ Corresponding author: hpliu@tsinghua.edu.cn

All authors are with the Department of Computer Science and Technology, Tsinghua University, Beijing, China

Abstract

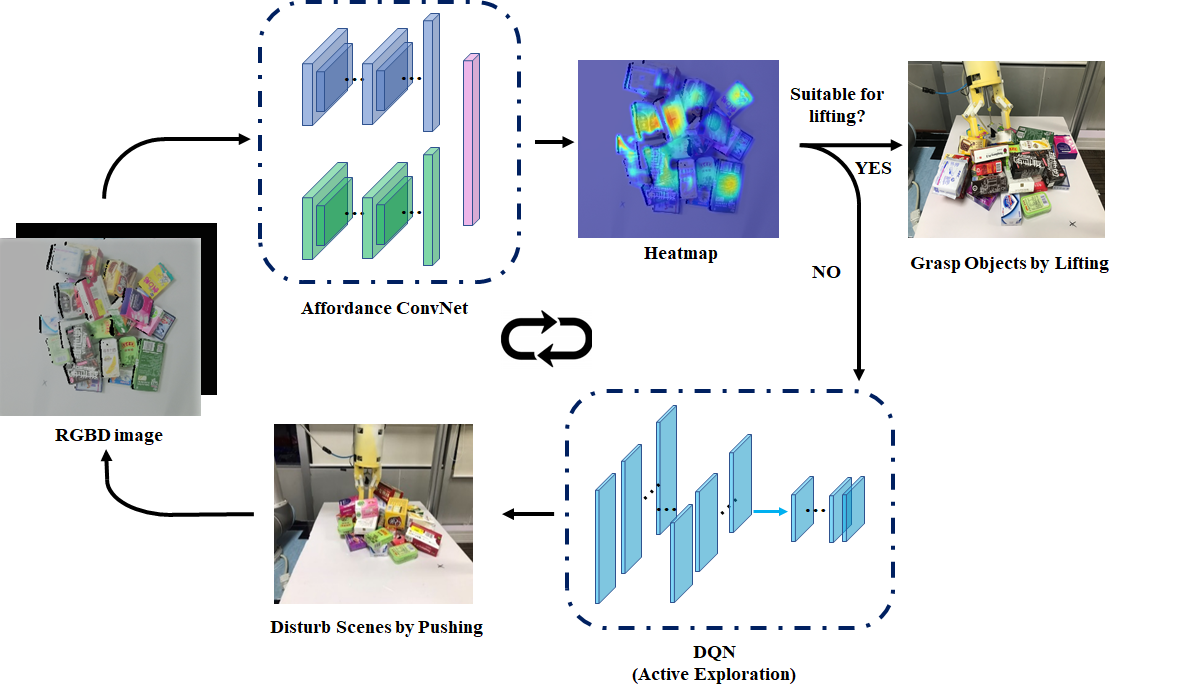

In this paper, a novel robotic grasping system is established to automatically pick up objects in a cluttered scene. A composite robotic hand that seamlessly combines a suction cup and a gripper is first designed. When grasping an object, the suction cup lifts the object from the clutter and the gripper then grasps it. Next, through active exploration, a deep Q-Network (DQN) is employed to help obtain a better affordance map, which provides pixel-wise lifting candidate points for the suction cup. In addition, an effective metric is designed to evaluate the current affordance map, and the robotic hand keeps actively exploring the environment until a good affordance map is obtained. Experiments demonstrate that the proposed robotic grasping system substantially increases the success rate when grasping objects in a cluttered scene.

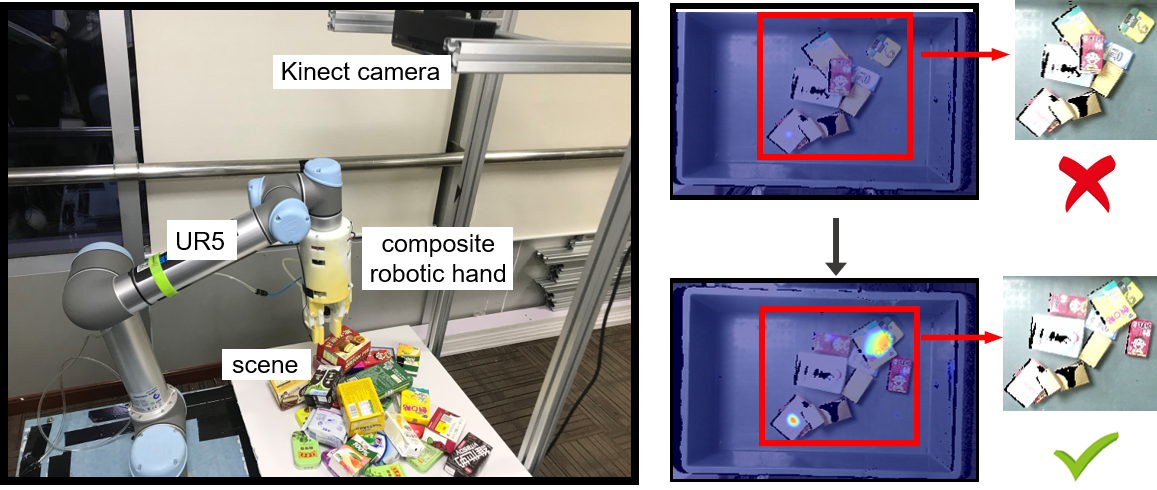

Our grasping system consists of a composite robotic hand for grasping, a UR5 manipulator for reaching the operation point, and a Kinect camera as the vision sensor. We apply active exploration to the environment to create more promising grasping conditions.

Summary Video

Pipeline

The pipeline of the proposed robotic grasping system is illustrated in the image above. First, the RGB image and depth image of the scene are obtained. The affordance ConvNet then computes the affordance map from both images. A metric Phi is proposed to evaluate the credibility of the current affordance map. If Phi meets the acceptance criterion, the composite robotic hand performs the grasp operation. Otherwise, the RGB image and depth image are fed into the DQN, which guides the composite robotic hand to disturb the environment appropriately by pushing objects. This process is repeated until all the objects in the environment have been successfully picked.
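The control flow described above can be sketched in a few lines of Python. This is only an illustrative sketch, not our released implementation: the callables `observe`, `affordance_net`, `choose_push`, `execute_push`, `execute_grasp` and `scene_is_empty`, the threshold `phi_threshold`, and the step limit `max_steps` are all hypothetical names. In particular, `credibility` uses the peak affordance value as a stand-in for Phi, which is an assumption made purely for illustration.

```python
from typing import Callable, Tuple

import numpy as np

Pixel = Tuple[int, int]


def credibility(affordance: np.ndarray) -> float:
    """Stand-in for the metric Phi (assumption: peak affordance value)."""
    return float(affordance.max())


def best_suction_point(affordance: np.ndarray) -> Pixel:
    """Return the (row, col) pixel with the highest affordance value."""
    row, col = np.unravel_index(np.argmax(affordance), affordance.shape)
    return int(row), int(col)


def run_episode(
    observe: Callable[[], Tuple[np.ndarray, np.ndarray]],
    affordance_net: Callable[[np.ndarray, np.ndarray], np.ndarray],
    choose_push: Callable[[np.ndarray, np.ndarray], object],
    execute_push: Callable[[object], None],
    execute_grasp: Callable[[Pixel], bool],
    scene_is_empty: Callable[[], bool],
    phi_threshold: float = 0.8,  # hypothetical acceptance threshold
    max_steps: int = 50,         # hypothetical safety limit
) -> int:
    """Alternate between pushing and grasping; return the number of successful grasps."""
    grasps = 0
    for _ in range(max_steps):
        if scene_is_empty():
            break
        rgb, depth = observe()
        affordance = affordance_net(rgb, depth)
        if credibility(affordance) >= phi_threshold:
            # The map is judged credible: lift at the best pixel and grasp.
            if execute_grasp(best_suction_point(affordance)):
                grasps += 1
        else:
            # The map is not credible: let the push policy disturb the scene.
            execute_push(choose_push(rgb, depth))
    return grasps
```

On the real system, `affordance_net` and `choose_push` would wrap the affordance ConvNet and the DQN respectively, while the remaining callables would wrap the camera and the manipulator.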

Example Results

Characteristics of Grasp Process

Compared with other suction grasping systems, the proposed composite robotic hand uses its two fingers to hold the object after the suction cup lifts it, which increases the stability of the grasp.

Robotic Experiments

We test our DQN model in a real environment, using a Microsoft Kinect V2 camera to acquire the RGB and depth images of the scene and a UR5 manipulator to carry our composite robotic hand. We select 40 different objects to build different scenes for our robotic hand to grasp.

Contact

If you have any questions, please feel free to contact Yixuan Wei.

March 19, 2019

Copyright © Yixuan Wei